Every company needs a website. The challenge for many is keeping that website up-to-date. Technology changes; algorithms and SEO change; how your customers use the web changes.

With all of a website’s moving parts, it’s easy for it to fall into disrepair and even cost you revenue. If your site can’t be found, doesn’t align with your brand and isn’t functional by today’s standards, then your prospects and customers will move on to another site -- likely a competitor’s.

Start with a Website Audit

When your competitors outrank you in search, that alone is good reason for a website audit. You need to know what is causing it and why fixing it matters. In a nutshell, you can’t fix what’s broken if you don’t even know it’s broken.

We conduct a lot of website audits, as we recommend most digital projects start with some sort of technical audit. Again, knowledge is power. And many of our clients have no idea what it means when a website and SEO audit returns messages like: 4xx status error, uncompressed JavaScript, duplicate meta descriptions, Google thinks your site stinks (just kidding...sort of).

That’s why we created this listing of web errors. What should you fix? What can be ignored?

Not only do we want to tell you what the error is, but we want you to understand how it affects your site overall, your opportunity for online lead generation and your bottom line. Then you can make an informed decision.

Understanding the Top 15 Technical and SEO Website Errors

This listing provides the most common issues we have found when conducting technical and SEO website audits.

Side note: If you’re looking for a full, comprehensive digital audit that includes competitor insight, online buyer’s journey analysis, system and tool recommendations, KPIs and goal setting, and a multi-phase strategic action plan, we do that too! Find out more here.

1. Crawlability and Site Architecture

Your website architecture is the structure of your website. The site architecture should be strategically mapped out before a website project begins. It should structure your pages in a consistent format so users and search engines can easily navigate the site.

When important pages can’t be found through the navigation, have hidden options or have the same content on multiple pages, users get lost and search engine bots get confused. When crawl depth exceeds four (meaning it takes more than four clicks to get somewhere), it’s likely a bot will stop crawling the site entirely.

In summary, when a bot can’t find your pages or stops crawling your site because it’s too complex, those pages won’t appear in search results.

A site architecture should be clean, not complex, and show how the main navigation, secondary navigation, nested pages and internal links all work together to make the site easy to navigate and understand.
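To make crawl depth concrete, here is a minimal Python sketch that measures how many clicks each page sits from the home page. The `site_links` map and the `click_depths` function are hypothetical; in a real audit the link map would come from a crawler export. The four-click limit is the one mentioned above.

```python
from collections import deque

def click_depths(links, home):
    """Return the minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical link map: each page lists the pages it links to.
site_links = {
    "/": ["/services", "/blog"],
    "/services": ["/services/seo"],
    "/services/seo": ["/services/seo/audits"],
    "/services/seo/audits": ["/services/seo/audits/checklist"],
    "/services/seo/audits/checklist": ["/services/seo/audits/checklist/pdf"],
    "/blog": [],
    "/orphan": [],
}

depths = click_depths(site_links, "/")
too_deep = sorted(page for page, depth in depths.items() if depth > 4)
unreachable = sorted(set(site_links) - set(depths))
print("More than four clicks deep:", too_deep)
print("Not reachable through links:", unreachable)
```

Pages in the first list are candidates for a shorter path from the navigation. Pages in the second list have no internal links pointing at them at all.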

2. Robots.txt

Flagged for Robots.txt? What the heck does that mean? A robots.txt file tells search engine bots what pages or files the bots can or can’t request from your site. It should be used to manage crawler traffic to your site, and occasionally can be used to keep a page off Google, depending on the file type.

Note: There are guidelines around this. In most cases, a noindex tag is used to keep a web page from being indexed. Blocking a page in robots.txt only stops bots from crawling it.

Why even have robots.txt in place? It increases a site’s crawling and indexing speed. However, formatting errors are quite common. When this happens, it can block Google from crawling and indexing specific pages or even an entire website. (And yes, we’ve uncovered this in audits!)
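Here is a small Python sketch of how one formatting slip can block an entire site. It uses the standard library's `urllib.robotparser`. The two robots.txt files and the paths are made-up examples, and `allowed_paths` is a helper name we chose for illustration.

```python
from urllib.robotparser import RobotFileParser

def allowed_paths(robots_txt, paths, agent="*"):
    """Report which paths a bot may request under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {path: parser.can_fetch(agent, path) for path in paths}

# What the site owner meant: keep bots out of the admin area only.
intended = """User-agent: *
Disallow: /admin/
"""

# What was published: a bare slash, which disallows every page.
mistake = """User-agent: *
Disallow: /
"""

paths = ["/", "/services/", "/admin/login"]
print(allowed_paths(intended, paths))
print(allowed_paths(mistake, paths))
```

Running a check like this against your live robots.txt before and after an edit is a cheap way to catch the "whole site blocked" scenario.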

3. URL Structure

Search engines and people do read URLs. It’s one of the first ways they determine what a web page is about. Poor URL structure negatively affects page indexing and ranking.

Check out the following example of a URL that makes it difficult to determine what exactly the page is about. Bad URL:

- https://jojoshoes.com/running-and-fitness-shoes/running-shoes/fitness-shoes-black/adult-shoes-comfort-bad-knees-running-16754/

Now, look at an example of a URL from our site that is simple, clean and immediately tells you the page is about our expertise in digital marketing (feel free to click through).

When it comes to optimizing URLs, follow these best practices:

- Use hyphens (dashes), not underscores, to separate words; bots may read an underscore as part of the word.
- Don’t use capital letters; they make URLs harder to read.
- Keep URL length under 100 characters.
- And most important, just keep it simple.
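These best practices are easy to check automatically. The Python sketch below is one possible take: `url_issues` is a hypothetical helper, the example.com URLs are placeholders, and the 100-character limit is the one from the list above.

```python
from urllib.parse import urlparse

MAX_URL_LENGTH = 100  # limit taken from the best practices above

def url_issues(url):
    """Return a list of best-practice problems found in a URL."""
    issues = []
    path = urlparse(url).path
    if "_" in path:
        issues.append("contains underscores")
    if path != path.lower():
        issues.append("contains capital letters")
    if len(url) >= MAX_URL_LENGTH:
        issues.append("is 100 characters or longer")
    return issues

for candidate in [
    "https://example.com/digital-marketing/",
    "https://example.com/Our_Services",
]:
    print(candidate, url_issues(candidate) or "looks fine")
```

"Keep it simple" is harder to automate, so treat a clean result as a starting point, not a verdict.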

4. 4XX, 5XX and 404

When a website audit returns 4xx and 5xx status codes, it means the web server couldn’t fulfill a request. 4xx codes are client-side errors, such as a malformed request or a request for a page that doesn’t exist (the familiar 404). 5xx codes are server-side errors, meaning the server itself failed while handling the request.

Broken links on your site also will give users a 404. And the more 404s, the more likely users will ditch your site. Simply put: Fix broken links and server issues.

When an entire page has been removed, put in place a 301 redirect to a relevant page so users land there instead of getting a 404 error.
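If you export a list of status codes from an audit tool, a few lines of Python can sort them into buckets. This is only a sketch: the `triage` function and its suggested actions are our own shorthand, while the code ranges follow the standard HTTP status classes.

```python
def triage(status_code):
    """Map an HTTP status code to a rough audit category."""
    if 200 <= status_code < 300:
        return "ok"
    if 300 <= status_code < 400:
        return "redirect"
    if status_code == 404:
        return "not found: fix the link or add a 301 redirect"
    if 400 <= status_code < 500:
        return "client error: check the request or link"
    if 500 <= status_code < 600:
        return "server error: check hosting and server logs"
    return "unknown"

# Hypothetical audit export: URL path -> status code returned.
audit_results = {"/": 200, "/old-pricing": 404, "/contact": 503, "/blog": 301}
for path, code in audit_results.items():
    print(path, code, triage(code))
```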

5. Internal Links

If your site gets flagged for internal links, it’s due to either too many or too few. The key when it comes to internal linking (a link within a page that leads to another page on your own site) is using the appropriate number of links to help users navigate the site.

Internal links are also important for Search Engine Optimization (SEO) as they help search engine bots understand the content and flow of your website pages.

Basically, you don’t want too many internal links. This will make pages overwhelming and confusing. If you have too few, this means there is an opportunity for website optimization.
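Counting the internal links on a page is a quick way to see where you stand. The sketch below uses only Python's standard library. The HTML snippet and example.com addresses are placeholders, and `internal_links` is a name we made up for this illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag."""
    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)

def internal_links(html, page_url):
    """Return the links on a page that point to the same host as the page."""
    collector = LinkCollector()
    collector.feed(html)
    site = urlparse(page_url).netloc
    resolved = [urljoin(page_url, href) for href in collector.hrefs]
    return [url for url in resolved if urlparse(url).netloc == site]

sample_html = (
    '<a href="/services/">Services</a>'
    '<a href="https://example.com/blog/">Blog</a>'
    '<a href="https://other.example.org/">Partner</a>'
)
links = internal_links(sample_html, "https://example.com/about/")
print(len(links), "internal links:", links)
```

What counts as "too many" or "too few" depends on the page, so the count is a prompt for review, not a pass/fail score.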

6. Sitemap

When a website’s sitemap doesn’t match the number of pages crawled in an audit, it can signal poor site crawlability and indexing issues.

Submitting a sitemap to Google Search Console is a great way to highlight the pages you want to show up in the search engine results pages. It’s not a guarantee these pages will be indexed, but it does help bots navigate a website faster and see new content quicker.
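A sitemap mismatch is easiest to understand as a comparison of two lists. This Python sketch parses a sitemap in the standard sitemaps.org XML format and compares it with a set of crawled URLs. The sitemap content, the crawled set and the `sitemap_urls` helper are all made up for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_urls(xml_text):
    """Return the set of URLs listed in a sitemap XML document."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)}

sitemap_xml = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/services/</loc></url>
  <url><loc>https://example.com/old-page/</loc></url>
</urlset>"""

# Hypothetical list of URLs an audit crawler actually reached.
crawled = {
    "https://example.com/",
    "https://example.com/services/",
    "https://example.com/blog/",
}

listed = sitemap_urls(sitemap_xml)
listed_not_crawled = sorted(listed - crawled)
crawled_not_listed = sorted(crawled - listed)
print("In sitemap but not crawled:", listed_not_crawled)
print("Crawled but missing from sitemap:", crawled_not_listed)
```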

7. Uncompressed JavaScript and CSS Files

It’s all about speed, baby - the faster, the better when it comes to load time of your website pages.

If your site is dinged for uncompressed JavaScript and CSS files, it means compression was not enabled to reduce file sizes, which slows down your site. When page load times are slow, users leave quickly. When users don’t like your site, neither do the search engines.
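To see why compression matters, you can gzip a stylesheet yourself and compare sizes. The Python sketch below does that with a made-up, highly repetitive CSS sample, so the savings it reports are larger than you should expect from real files. On a live site, compression is normally switched on in the web server or CDN settings, not in application code.

```python
import gzip

def compression_savings(text):
    """Return (raw size, gzipped size) in bytes for a text asset."""
    raw = text.encode("utf-8")
    compressed = gzip.compress(raw)
    return len(raw), len(compressed)

# Made-up sample: one CSS rule repeated many times.
sample_css = ".button { color: #fff; background: #0055aa; padding: 8px 16px; }\n" * 200

raw_size, gz_size = compression_savings(sample_css)
print(f"{raw_size} bytes uncompressed, {gz_size} bytes gzipped")
```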

8. Page Speed

While we are talking about speed, did you know most website users expect a web page to load in less than two seconds? Yep, that’s right. You have two seconds to get in front of their face.

If your pages load slowly, you are likely losing people before you even get a chance to introduce your company. Page speed can be affected by multiple things, from poor web hosting and unoptimized images to inactive plug-ins and disabled compression.

9. Security Alert!

Within the last year, we have seen more and more sites flagged as ‘Not Secure’. Luckily, this can be a relatively simple fix.

In 2017, Google pushed all sites to acquire an SSL (Secure Sockets Layer) certificate to allow the search engine to verify websites as legitimate and secure. Sites without an SSL certificate now appear with a 'Not secure' tag before the URL, which turns users away.

Key takeaway: In an era of security breaches and identity theft, get an SSL certificate to secure your site for users.

10. Duplicate Content

According to research conducted by SEMrush, a leading marketing analytics software company, the most common SEO issue affecting websites is duplicate content. This occurs when substantive blocks of content within a domain completely match or are very similar.

When you have duplicate content across your website, you are essentially diluting your SEO efforts. Search engines don’t know which page to choose, and your own pages are competing against one another.

Also, search engine algorithms are programmed to place a higher value on unique content, as a user doesn’t want a dozen pages with the exact same content.
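One rough way to spot near-duplicate pages in your own content is to compare overlapping word sequences. The Python sketch below scores two texts from 0 (nothing shared) to 1 (identical). It is a simple illustration, not a description of how any search engine detects duplicates, and the sample sentences are invented.

```python
def shingles(text, size=3):
    """Split text into a set of overlapping word sequences of the given size."""
    words = text.lower().split()
    return {" ".join(words[i:i + size]) for i in range(len(words) - size + 1)}

def similarity(text_a, text_b):
    """Jaccard similarity of the two texts' word shingles."""
    set_a, set_b = shingles(text_a), shingles(text_b)
    if not set_a or not set_b:
        return 0.0
    return len(set_a & set_b) / len(set_a | set_b)

page_a = "we build websites that convert visitors into leads"
page_b = "we build websites that convert visitors into customers"
page_c = "our bakery sells fresh bread every single morning"

print(round(similarity(page_a, page_b), 2))
print(round(similarity(page_a, page_c), 2))
```

Page pairs that score high are worth a manual look to decide whether to merge them, rewrite one or point both at a single preferred version.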

11. Header Tags

Header tags, also known as H1s, H2s and H3s, are important for creating a hierarchy on each web page for the search engine bots. The H1 is the most important, H2s are next, and so on.

There should only be one H1 tag on any page, but there can be multiple H2s and below. Think of it like this: The H1 is the title of a book, while the H2s are the chapters. The H3s are subheadings in the chapters.

Headers should include keywords and should also be captivating and interesting -- not always an easy thing to do. This is why it’s common to see missing or duplicated headers.

If you want the search engines to correctly ‘read’ your website, take time to write and implement SEO-optimized headers.
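The one-H1 rule is simple to verify. Here is a minimal Python sketch using the standard library's HTML parser. `heading_issues` is a hypothetical helper and the HTML snippets are placeholders.

```python
from html.parser import HTMLParser

class HeadingCollector(HTMLParser):
    """Record the level (1-6) of every heading tag in document order."""
    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))

def heading_issues(html):
    """Return a list of H1 problems found on a page."""
    collector = HeadingCollector()
    collector.feed(html)
    h1_count = collector.levels.count(1)
    if h1_count == 0:
        return ["missing H1"]
    if h1_count > 1:
        return [f"{h1_count} H1 tags"]
    return []

print(heading_issues("<h1>Website Audits</h1><h2>Why Audit?</h2>"))
print(heading_issues("<h2>Why Audit?</h2>"))
print(heading_issues("<h1>Audits</h1><h1>Also Audits</h1>"))
```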

12. Title Tags

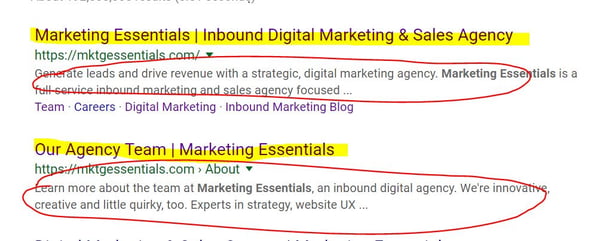

Website title tags are one of the most important on-page SEO elements. The title tag is the clickable headline that appears in search results.

To return the best results for users, search engines look for title tags that follow best practices. This means they are unique to each page, they are no longer than 70 characters and they provide relevant information about a page’s content.

One of the biggest errors with title tags is having duplicates across the site instead of a unique one for each web page.

Image shows title tags highlighted; meta descriptions circled.
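Duplicate and overlong title tags can be found with a short script once you have a list of pages and their titles. In this Python sketch, the `pages` data and the `title_issues` helper are invented for illustration, and the 70-character limit is the one given above.

```python
from collections import defaultdict

MAX_TITLE_LENGTH = 70  # limit taken from the best practices above

def title_issues(titles):
    """Return (groups of URLs sharing a title, URLs whose title is too long)."""
    urls_by_title = defaultdict(list)
    for url, title in titles.items():
        urls_by_title[title.strip().lower()].append(url)
    duplicates = [sorted(urls) for urls in urls_by_title.values() if len(urls) > 1]
    too_long = sorted(url for url, title in titles.items() if len(title) > MAX_TITLE_LENGTH)
    return duplicates, too_long

# Hypothetical crawl export: URL path -> title tag text.
pages = {
    "/": "Home | Example Co",
    "/services/": "Home | Example Co",
    "/blog/": "Blog | Example Co",
    "/about/": "About " + "our long and winding company history " * 3,
}

duplicates, too_long = title_issues(pages)
print("Duplicate titles:", duplicates)
print("Titles over 70 characters:", too_long)
```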

13. Meta Descriptions

Meta descriptions are the descriptions that appear below the title tag. Their sole job is to provide key information that will entice the user to click through to your site. Sites often get flagged for having duplicate or missing metas.

While metas don’t directly affect SEO, they do affect click-through rate (CTR) to your site. And when CTRs are low, search engines see the site as not valuable to users.

14. Images

Image errors are typically due to missing alt text. Alt texts are textual descriptions of images to help a search engine bot understand what the image shows. For websites with lots of imagery (and because users like images), alt text is very important.

Even more important, alt text is useful for visually impaired people using screen readers. These readers use the information in alt text to describe images to the listener.
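Missing alt text is one of the easier errors to find yourself. The Python sketch below lists images with no alt text. The HTML snippet is a placeholder and `images_missing_alt` is a name chosen for this example. Note that it also flags an empty `alt=""`, which can be intentional for purely decorative images, so review the results by hand.

```python
from html.parser import HTMLParser

class ImageCollector(HTMLParser):
    """Record the src of every <img> tag that has no usable alt text."""
    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            if not (attributes.get("alt") or "").strip():
                self.missing_alt.append(attributes.get("src", "(no src)"))

def images_missing_alt(html):
    """Return the src values of images without alt text."""
    collector = ImageCollector()
    collector.feed(html)
    return collector.missing_alt

sample_html = (
    '<img src="team.jpg" alt="Our team at the office">'
    '<img src="logo.png">'
    '<img src="hero.jpg" alt="">'
)
print(images_missing_alt(sample_html))
```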

15. Mobile and Dynamic

It’s no longer an option: Your website must be mobile responsive.

Your site will be flagged if it is not developed so the layout and user experience adapt to all browser window sizes and mobile devices -- like phones and tablets.

Implementing a mobile responsive design likely means rebuilding your site, and with the dynamic designs today, you can target your audience even more effectively with a custom experience.

Essentially, a dynamic design allows you to provide a specific experience for the mobile version of your site, such as showing different content or optimizing for different search queries than the desktop version. You can have different versions of a single page on a single URL.

In summary, what do you do with your website?

You start with an audit and map out a strategic plan of how to make your website work for your company. With 3.5 billion Google searches every day, you can’t afford not to.
